After months of loudly insisting that there was simply no way to get into San Bernardino shooter Syed Rizwan Farook’s discarded iPhone without Apple’s help, the FBI announced yesterday that it had successfully accessed the phone. It took the FBI and an unnamed third party one week to hack it. So much for impossible.

It’s hard to tell whether the FBI truly didn’t know until last week that the iPhone was hackable, or whether it decided at the last minute to back out of a precedent-setting case that it looked increasingly likely to lose. Either way, the San Bernardino iPhone case should never have gotten to this point. It should never have even started down this particular road. And it wouldn’t have, if the FBI were any good at its job.

The Apple–FBI fight has sparked healthy conversations around encryption, and dark spaces, and the primacy of a phone’s security versus court-approved searches. What it’s done most of all, though, is highlight the cybersecurity incompetence of our preeminent domestic intelligence agency. The FBI getting into that phone isn’t the real problem. It’s that it had to ask for a shortcut.

We’ve been through this before. Even if you don’t follow this sort of thing closely, you might have guessed that encryption isn’t a new concept. It’s been around for decades, as have various governmental attempts to get around it.

There’s no direct historical parallel to the FBI’s recent misadventures, but there have been similarly high-profile cases. Most notably, in the early ’90s, the NSA created something called a “Clipper Chip,” a microchip meant to be embedded in consumer telephones that would provide encryption (good!) along with a built-in “backdoor” that would let government spies listen in at will (not so good!).

The reaction was largely the same then as it has been to the Apple case today. Privacy advocates yelped about state surveillance, as well as the program’s ultimate futility. In the same way the FBI has no control over Samsung or other companies based outside the U.S. today, the NSA couldn’t compel, say, Panasonic to sign up for a Clipper Chip in its landlines in the ’90s. The bad guys, then, would just buy Panasonic. Within a year of the Clipper Chip reveal, a computer scientist named Matt Blaze found a vulnerability in its security measures that would have let anyone with enough hacking savvy break its vaunted encryption. The government killed the program before it really started.

The Clipper Chip exposed the real weakness of trying to take shortcuts with encryption: There are none. You can’t build a backdoor that only one person or agency has access to. Once it’s open, anyone with enough time, determination, and know-how can walk right through. A group of over a dozen computer scientists, including Blaze, made this point just last summer, in a definitive paper called “Keys Under Doormats.” Handy metaphor!

It’s an instructive period not only because the government ultimately didn’t get what it wanted, but also because it pretty well devastated the NSA’s cybersecurity efforts for nearly a decade. Seymour Hersh outlined just how ill-suited the agency was for the age of encryption in a brutal 1999 New Yorker takedown titled “The Intelligence Gap.” Insular hiring practices and a refusal to evolve left the NSA practically flying blind.

Today, while you can (and should) argue over the legality and ethicality of the NSA all day long, no one can argue that it’s bad at its job. It evolved. It adapted. It invested significant resources in its technological capabilities, and recruited gifted hackers from industry conferences like Def Con, and straight out of elite collegiate math programs. For five years, the NSA employed Charlie Miller, arguably the most gifted Apple hacker alive. (Miller now works at Uber.)

And that’s the way it should be. Security should never stop improving, and law enforcement’s ability to work around it, when necessary and court-approved, should be forced to improve in kind. The NSA has done a lot of unsavory things with its technological chops, but it has them, and it’s gone to great pains to achieve its level of institutional skill and knowledge — just as Apple and its peers have gone to great pains to make their technologies near-impenetrable. It’s an arms race, but it works: Companies are constantly improving their security, and the NSA isn’t guaranteed easy or universal access to private citizens’ data.

And then there’s the FBI.

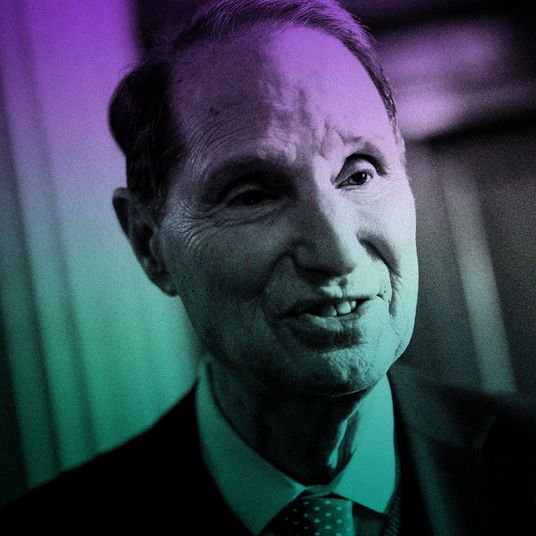

“I have to hire a great workforce to compete with those cyber criminals and some of those kids want to smoke weed on the way to the interview.” That’s a 2014 quote from FBI director James Comey. At the time, his agency had been charged with hiring 2,000 additional personnel, including several with cybersecurity expertise; the FBI bars applicants who have used marijuana in the previous three years. This presented a problem.

It makes for a funny headline, but it’s also … true? At least in the broad sense, regardless of actual drug use, today’s hackers aren’t exactly a cultural fit with Quantico. At least the NSA — well, before Snowden — had some cultural cachet. They may be Big Brother, but they’ve got all the fun toys. The FBI, meanwhile, is what would happen if a literal overstarched suit were given life and tasked with creating a law-enforcement agency. Oh, and they also don’t pay all that great. At least not as well as Uber.

“Just as the NSA had to change in the late 1990s, so must the FBI,” Susan Landau, a professor at Worcester Polytechnic Institute (and co-author of “Keys Under Doormats”), testified to Congress earlier this month. “In fact, that change is long overdue.”

A better-equipped FBI would have helped this case, specifically. In fact, if the FBI hadn’t reset the iCloud password associated with the San Bernardino iPhone within a day of having it in its possession, it would have had all the information it wants months ago. We could have avoided all of this entirely.

More important (since there’s almost certainly nothing of value on that one particular iPhone in the first place), an FBI that understands cybersecurity genuinely does make us all safer. Law enforcement shouldn’t be able to break into any phone whenever it wants. But not even Apple, which gives law enforcement access to the information on its servers all the time, would argue that digital “dark spaces” should be impregnable. They should be dark, though, and accessing them should be difficult. It should take considerable resources. It should come with a court order. And ideally, it shouldn’t hinge on a third-party forensics contractor stepping forward with the solution.

Calling in a third-party cavalry still beats compelling Apple to help, though. It’s a good enough solution for now. The FBI gets the information it wants, and doesn’t get to set a precedent that would let it force other U.S. companies to weaken their security against their will. The FBI getting into this one iPhone is fine. And, hey! Maybe it found something useful after all.

The FBI shouldn’t, though, get to cheat. It shouldn’t be able to get Apple to do its homework for it, especially when there was so little to gain. It needs to smarten up. It needs to do its job.