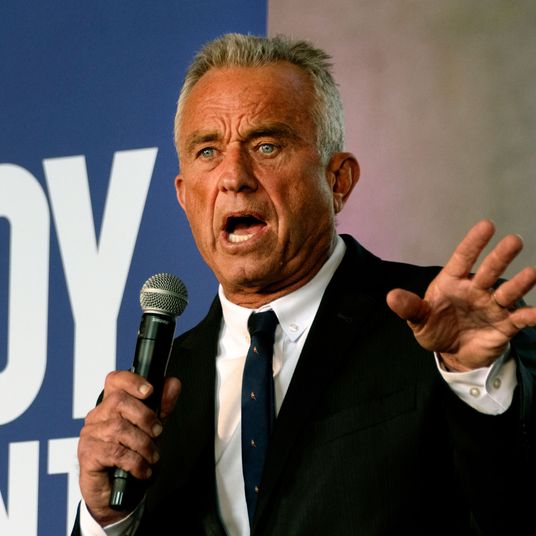

On the eve of the presidential election, Barack Obama said that Facebook’s accommodation of “outright lies” creates a “dust cloud of nonsense.” At a tech conference Thursday, Mark Zuckerberg sort of replied, saying that “voters make decisions based on their lived experience,” and that it’s “a pretty crazy idea” to think that fake news shared on Facebook “influenced the election in any way.” In a Facebook post published late Saturday, Zuckerberg clarified his position, saying that 99 percent of what people share is “authentic” — whatever that means — and that only a “very small amount is fake news and hoaxes.” He maintained that it’s “extremely unlikely” the hoaxes swung the outcome, while also admitting that, yes, there’s room to improve.

“I am confident we can find ways for our community to tell us what content is most meaningful, but I believe we must be extremely cautious about becoming arbiters of truth ourselves,” he wrote. “We hope to have more to share soon, although this work often takes longer than we’d like in order to confirm changes we make won’t introduce unintended side effects or bias into the system.”

The underlying message, though, is that Facebook still considers itself an objective platform. It is one of the deeper ironies of 2016 that Facebook’s understanding of what a commitment to impartiality means has helped fan the flames of hyperpartisanship, given the “filter bubble” of perspective-validating content that the algorithm is built to encourage and the “ideological media” that have flourished within that space, the most flagrant example being Macedonian teens churning out pro-Trump content for clicks and cash. If you have no commitment to truth, it’s a genius strategy: Just one viral hit can keep such a site afloat (not unlike other clickbait news models), and no original reporting is required, which means low overhead and big scale. It all adds to the muddiness of the News Feed, says American University digital-media scholar Deen Freelon: Some posts are outright falsehoods, others take quotes or events out of context or blur the line between fact and opinion, and sometimes reputable news orgs get a fact wrong. Should those be banned? “It’s an epistemological slurry of information,” Freelon says, “where it’s hard to disentangle truth from falsehood.” And behold, fake news turns into trending stories.

What compounds the mystery is how close to the vest Facebook plays the News Feed and its operating algorithm, a harsh mistress that’s crushed a publisher or two. While Facebook-employed researchers have claimed that the site doesn’t have a filter bubble problem (in the journal Science, no less!), independent academics have, in the estimation of University of Southern California researcher Mike Ananny, “persuasively debunked” the finding, citing unrepresentative sampling and other methodological hand-waving. “The real answer is that we don’t know [the scale of disinformation] because Facebook is not an open platform that makes its data, people, and algorithms available to researchers,” he explained in an email. “We either have to make intelligent guesses, reverse-engineer their systems, or trust the research that they publish on themselves. None of these options is satisfying.”

If Facebook does want to cure its disinformation illnesses, there are plenty of places to start. After talking with a range of media and information scholars, we have a few recommendations.

Take responsibility as a publisher.

The first step, as they say, is admitting you have a problem. The company line from Facebook is that it’s a “platform,” not a publisher. A tech company, not a media company. But as University of Wisconsin-Milwaukee internet-ethics scholar Michael Zimmer tells Select All, the company isn’t a neutral information conveyor with no obligation regarding content. “That might work for an old-school phone provider, who simply puts up telephone poles and connects the wires,” he says. “But Facebook is deeply involved in the curation of content, whether from advertising partners, preferred news organizations, or its own algorithmic determinations of what shows up on one’s news feed, and they can’t now try to claim they have no complicity in how information, whether true or false, is disseminated through the network.” Indeed, as a Reuters investigation revealed in October, the company receives over a million content complaints a day, which get routed to content-policing teams in Hyderabad, Austin, Dublin, and Menlo Park. Meanwhile, an elite group of senior executives directs “content policy” and makes editorial decisions, like unbanning iconic war photojournalism. “Facebook has decided it wants to be in the business of what people see and don’t,” says Freelon. “Once you make that decision, you’re a publisher. You’re in the business of gatekeeping.”

Hire people with editorial and sociological backgrounds, and give them a voice.

In May, a Gizmodo investigation reported that “news curators” for the Trending Topics section routinely suppressed conservative news, a report that led Facebook to ditch the humans in favor of algorithms for that job. Follow-up reporting indicated that Facebook shelved an update that would have curbed fake news because it “disproportionately impacted right-wing news sites by downgrading or removing that content from people’s feeds,” according to Gizmodo’s Michael Nuñez.

If Facebook does want to tackle the fake-news problem, says Ananny, it will need to bring back and expand the human editorial teams, and pair product managers and engineers with people who have editorial expertise. Another important step, he says, would be to create a public editor position, similar to the role at the New York Times: someone who acts as a liaison between readers (or users) and the organization itself.

Design for crowdsourcing.

The News Feed itself could be better designed to indicate whether or not a post might be, as a philosopher would say, bullshit: Stories that are old or have been debunked by FactCheck or Snopes could be labeled as such, says Ananny. After all, one of the problems is that a fact-check never gets shared as much as the original hoax.

Rather than relying only on top-down “curation,” Facebook could enlist the help of its many users to identify the worst runoff from the informational slurry. It could be similar to how YouTube monitors content, says Michael Zimmer, the University of Wisconsin-Milwaukee ethicist: Create a system that lets users flag disinformation. Then, after a threshold of complaints is reached, an employee could look at the story. But “while YouTube typically removes information flagged as offensive by users, Facebook could actually just employ some kind of icon that warns users that this information might be false,” he says. “That way, Zuckerberg’s ideal of letting the information stay on the platform is maintained, but the spread of disinformation might be slowed, or at least made known.” Judging from the content-oversight system the company already has in place and Zuckerberg’s teasing that the company will “find ways for our community to tell us” about “meaningful” content, that seems like a likely direction forward.

Make the algorithm more transparent. Maybe.

Through the friends you have, the things you click on, and many other factors, Facebook builds a filter bubble around you. But there’s no way of knowing how you’re being sorted. The most shocking case came out thanks to a ProPublica investigation, showing that advertisers could use “ethnic affinity” to exclude users by race. If the algorithm were made more transparent, then users “could understand the kind of profile that was being built around them, how it was sequestering them into a certain space in its platform,” says University of California, Los Angeles, information scientist Safiya Umoja Noble. Users are already being sorted, so why not let them have explicit agency in the process?

Another way to say this is “newsfeed personalization”: Rather than having what you see surreptitiously constructed by Facebook, you could turn on filters against disinformation or for vetted news sources, similar to what Instant Articles already does. This way, the settings would be explicit, rather than delivered automatically, and “objectively,” by an overarching algorithm.

But the “epistemological slurry” presents a problem here, too. Like pornography, disinformation is something that you know when you see it, and beyond the most egregious fake news, it’s hard to systematically sort purposeful disinformation from the merely misinformed. (Just ask Google.) Beyond that, to take action is to risk being called biased: According to the Gizmodo report, Facebook passed on fighting fake news because it feared a conservative backlash. “They absolutely have the tools to shut down fake news,” an anonymous source told Gizmodo, adding that “there was a lot of fear about upsetting conservatives after Trending Topics.”

Since the internet isn’t a limited spectrum and Facebook doesn’t have a complete monopoly, there isn’t a precedent for any sort of governmental regulation. Except in cases of defamation, says Zimmer, disinformation itself is constitutionally protected speech. The best you can hope for, he says, is that the company could be pressured into being more transparent about how it organizes feeds. But not even that is a guarantee, since Facebook could simply maintain that the algorithm is a trade secret, which is the same reason, he says, that Google doesn’t have to open up about how its search engine works. While Facebook may serve the public good, it isn’t a public institution, so regulation, if it were to happen, would come slowly. The Electronic Frontier Foundation’s Jillian York wrote in an email that if the company doesn’t take responsibility for disinformation, regulation will be inevitable. “Honestly, it doesn’t look good to me,” she wrote. “Mark Zuckerberg’s latest statement shows he clearly doesn’t understand the gravity of the situation, and the role of Facebook in most people’s lives.”