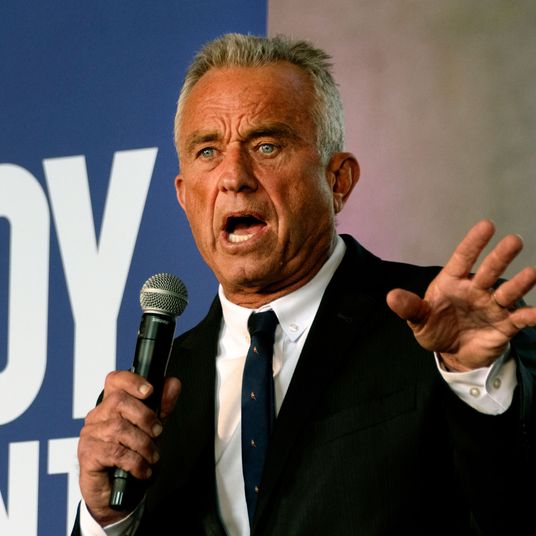

Mark Zuckerberg had just returned from paternity leave, and he wanted to talk about Facebook, democracy, and elections and to define what he felt his creation owed the world in exchange for its hegemony. A few weeks earlier, in early September, the company’s chief security officer had admitted that Facebook had sold $100,000 worth of ads on its platform to Russian-government-linked trolls who intended to influence the American political process. Now, in a statement broadcast live on Facebook on September 21 and subsequently posted to his profile page, Zuckerberg pledged to increase the resources of Facebook’s security and election-integrity teams and to work “proactively to strengthen the democratic process.”

To effect this, he outlined specific steps to “make political advertising more transparent.” Facebook will soon require that all political ads disclose “which page” paid for them (“I’m Epic Fail Memes, and I approve this message”) and ensure that every ad a given advertiser runs is accessible to anyone, essentially ending the practice of “dark advertising” — promoted posts that are only ever seen by the specific groups at which they’re targeted. Zuckerberg, in his statement, compared this development favorably to old media, like radio and television, which already require political ads to reveal their funders: “We’re going to bring Facebook to an even higher standard of transparency,” he writes.

This pledge was, in some ways, the reverse of another announcement the company made earlier the same day, unveiling a new set of tools businesses can use to target Facebook members who have visited their stores: Now the experience of briefly visiting Zappos.com and finding yourself haunted for weeks by shoe ads could have an offline equivalent produced by a visit to your local shoe store (I hope you like shoe ads). Where Facebook’s new “offline outcomes” tools promise to entrap more of the analog world in Facebook’s broad surveillance net, Zuckerberg’s promise of transparency assured anxious readers that the company would submit itself to the established structures of offline politics.

It was an admirable commitment. But reading through it, I kept getting stuck on one line: “We have been working to ensure the integrity of the German elections this weekend,” Zuckerberg writes. It’s a comforting sentence, a statement that shows Zuckerberg and Facebook are eager to restore trust in their system. But … it’s not the kind of language we expect from media organizations, even the largest ones. It’s the language of governments, or political parties, or NGOs. A private company, working unilaterally to ensure election integrity in a country it’s not even based in? The only two I could think of that might feel obligated to make the same assurances are Diebold, the widely hated former manufacturer of electronic-voting systems, and Academi, the private military contractor whose founder keeps begging for a chance to run Afghanistan. This is not good company.

What is Facebook? We can talk about its scale: Population-wise, it’s larger than any single country; in fact, it’s bigger than any continent besides Asia. At 2 billion members, “monthly active Facebook users” is the single largest non-biologically sorted group of people on the planet after “Christians” — and, growing consistently at around 17 percent year after year, it could surpass that group before the end of 2017 and encompass one-third of the world’s population by this time next year. Outside China, where Facebook has been banned since 2009, one in every five minutes on the internet is spent on Facebook; in countries that have only recently reached high rates of internet connectivity, like Myanmar and Kenya, Facebook is, for all intents and purposes, the whole internet.

But Facebook’s vertigo-inducing scale, which encompasses not just the sheer size of its user base but the scope of its penetration of human activity — from the birthday-reminder mundane to the liberal-democracy significant — defies comprehension, much like the internet’s. When I scroll through the news feed on my phone, it’s almost impossible to hold in my mind that the site on which I am currently considering joining a group called “Flat Earth—No Trolls” is the same one whose executives are likely to testify in front of Congress about their company’s role in a presidential election. Or that the site I use to invite people to parties is also at the center of an international controversy over documentation of the ethnic cleansing of Rohingya Muslims in Myanmar.

Facebook has grown so big, and become so totalizing, that we can’t really grasp it all at once. It is like a four-dimensional object: we catch slices of it when it passes through the three-dimensional world we recognize. In one context, it looks and acts like a television broadcaster; in another, an NGO. In a recent essay for the London Review of Books, John Lanchester argued that for all its rhetoric about connecting the world, the company is ultimately built to extract data from users to sell to advertisers. This may be true, but Facebook’s business model tells us only so much about how the network shapes the world. Over the past year I’ve heard Facebook compared to a dozen entities and felt like I’ve caught glimpses of it acting like a dozen more. I’ve heard government metaphors (a state, the E.U., the Catholic Church, Star Trek’s United Federation of Planets) and business ones (a railroad company, a mall); physical metaphors (a town square, an interstate highway, an electrical grid) and economic ones (a Special Economic Zone, Gosplan). For every direct comparison, there was an equally elaborate one: a faceless Elder God. A conquering alien fleet. There are real consequences to our inability to understand what Facebook is. Not even President-Pope-Viceroy Zuckerberg himself seemed prepared for the role Facebook has played in global politics this past year. In which case, how can we be assured that Facebook is really safeguarding democracy for us and that it’s not us who need to be safeguarding democracy against Facebook?

Nowhere was this confusion about Facebook’s and Zuckerberg’s role in public life more in evidence than in the rumors that the CEO was planning to run for president. Every year, Zuckerberg takes on a “personal challenge,” a sort of billionaire-scale New Year’s resolution, about which he posts updates to his Facebook page. For most Facebook users, these meticulously constructed and assiduously managed challenges are the only access they’ll ever have to Zuckerberg’s otherwise highly private personal life. Thousands of people cluster in the comments under his status updates like crowds loitering outside Buckingham Palace, praising the CEO, encouraging him in his progress, and drawing portraits of his likeness.

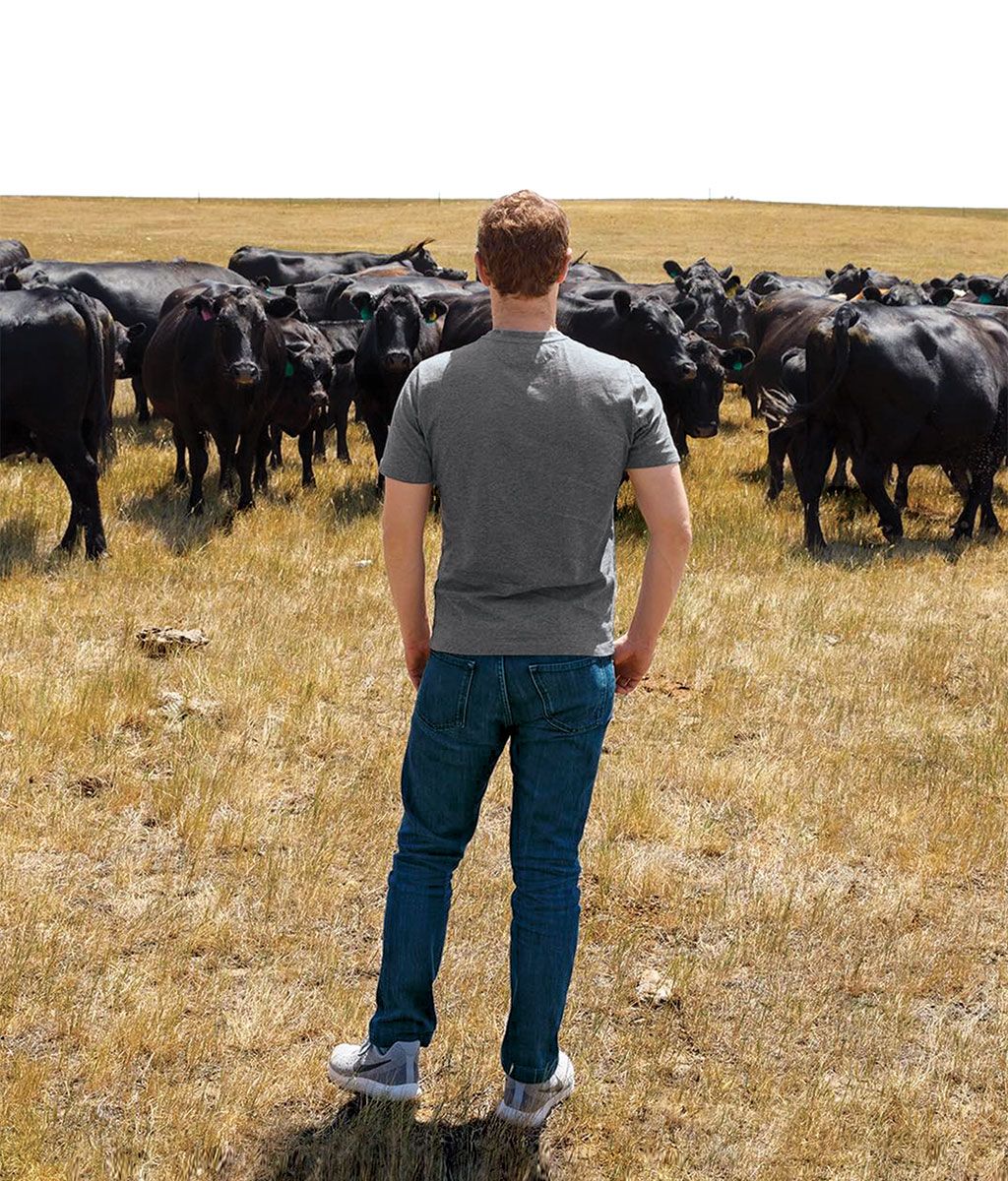

This year, Zuckerberg’s challenge has been to meet people in all the states of the U.S. that he hadn’t yet visited. His first stop, in January, was Texas; since then, he’s been to 24 other states. Zuckerberg has adamantly denied that the trips are a trial run for the campaign trail, and, having spoken with many of the people he’s met with over the course of his journeys — not to mention stern Facebook publicists — I tend to believe him. He limits his tour activity to interactions in private groups or unannounced visits — no speeches, no barnstorms, no baby-kissing. He’s issued no policy prescriptions and inserted himself into political debates rarely and in limited ways. And yet, the road trip sure looks like a campaign — or at least the sort of “listening tour” that politicians sometimes stage to convince voters, before even announcing, that their hearts are in the right place.

To some extent, of course, the media curiosity is his own fault. (After all, he did choose to be professionally photographed while eating fried food and staring intently at machinery.) But it’s hard for me not to think that the incessant speculation is a function of our own incomplete view of Facebook. The Zuckerberg-for-president interpretation of his project understands Facebook as a large, well-known company, from which a top executive might reasonably launch a political career within the recognizable political framework of the U.S. electoral process.

But if Facebook is bigger, newer, and weirder than a mere company, surely his trip is bigger, newer, and weirder than a mere presidential run. Maybe he’s doing research and development, reverse-engineering social bonds to understand how Facebook might better facilitate them. Maybe Facebook is a church and Zuckerberg is offering his benedictions. Maybe Facebook is a state within a state and Zuckerberg is inspecting its boundaries. Maybe Facebook is an emerging political community and Zuckerberg is cultivating his constituents. Maybe Facebook is a surveillance state and Zuckerberg a dictator undertaking a propaganda tour. Maybe Facebook is a dual power — a network overlaid across the U.S., parallel to and in competition with the government to fulfill civic functions — and Zuckerberg is securing his command. Maybe Facebook is border control between the analog and the digital and Zuckerberg is inspecting one side for holes. Maybe Facebook is a fleet of alien spaceships that have colonized the globe and Zuckerberg is the viceroy trying to win over his new subjects.

Or maybe it’s as simple as this: If you run a business and want to improve it, you need to spend time talking to your customers. If you’ve created a hybrid state–church–railroad–mall–alien colony and want to understand, or expand, it, you need to spend time with your hybrid citizen-believer-passenger-customer-subjects.

Zuckerberg’s trip was the most covered component of what seemed to be a broad self-examination on the part of the company that began soon after the election, as columns and articles about the scourge of “fake news” castigated the site for its inaction in the face of this tide of misinformation. Zuckerberg himself was initially resistant to an interpretation of 2016 that placed undue — or, really, much of any — blame on Facebook. “Personally, I think the idea that fake news on Facebook, which is a very small amount of the content, influenced the election in any way,” Zuckerberg told a crowd at a conference two days after the election, “is a pretty crazy idea.” In a status update a few days later, he explained why Facebook wouldn’t undertake drastic efforts to address misinformation on its platform: “This is an area where I believe we must proceed very carefully though. Identifying the ‘truth’ is complicated.” Facebook had traditionally, and sometimes controversially, declined to litigate the “truth” of claims posted to its platform. It preferred to see itself as a liberal institution in the classical sense, one that allowed free debate and discussion, provided that no one posted a photo that included a nipple.

This pose of thoughtful deliberation and liberality did not satisfy complaints. Facebook was unquestionably important to the election: Between March 23, 2015, when Ted Cruz announced his candidacy, and November 2016, 128 million people in America created nearly 10 billion Facebook posts, shares, likes, and comments about the election. (For scale, 137 million people voted last year.) But what had been presented as a democratic town hall was revealed to be a densely interwoven collection of parallel media ecosystems and political infrastructures that operated outside the control of mainstream media outlets and major political parties and moved like a wrecking ball through both.

Opportunistic hucksters and unhinged true believers sold bizarre conspiracy theories, the former for the purpose of driving traffic to their advertising-festooned websites and the latter out of some mixture of cynicism and zealotry. Hyperpartisan sites like TruthFeed and Infowars now made up what Yochai Benkler of Harvard’s Berkman Klein Center for Internet and Society called a right-wing social-media “attention backbone,” through which conspiracy-mongering and disinformation traveled up to legitimating sources and with which extreme actors could set the parameters of political conversation, as Breitbart did with immigration. There was no easy way to moderate or counter this without abjuring democratic values. As a recent study from Benkler and his colleagues on social media’s role in the election puts it, “Our observations suggest that fixing the American public sphere may be much harder than we would like.”

It wasn’t just a panicking media Establishment that wasn’t buying Facebook’s neutral stance; increasingly, it was Facebook employees themselves. In late November, BuzzFeed reported on the existence of a secret Facebook “task force” that had assembled, without managerial oversight, to deal with the problem of misinformation. That BuzzFeed was able to learn about the task force was as noteworthy as its existence: Dissent is rare at Facebook, and openly critical leaks like that are almost unheard of. It had become clear that the company could no longer fall back on the pieties of neutral platformism. Zuckerberg soon issued a new status update, outlining steps Facebook would take to address the problem of misinformation, and a month later, the company released the first in a series of updates to its platform aimed at heading it off. “Facebook is a new kind of platform. It’s not a traditional technology company. It’s not a traditional media company,” he said in a video chat with Facebook’s COO, Sheryl Sandberg. “We feel responsible for how it’s used.”

And then, in January, he launched his personal challenge. In his announcement, he referred obliquely to a “tumultuous” 2016; it was the first time he seemed to indicate that he had been personally shaken by the election, and he offered his own analysis of the global political moment. “For decades, technology and globalization have made us more productive and connected,” he wrote. “This has created many benefits, but for a lot of people it has also made life more challenging. This has contributed to a greater sense of division than I have felt in my lifetime.”

It was a remarkable thing for the CEO of Facebook to admit: Zuckerberg had spent years touting Facebook’s ultimate goal as making “the world more open and connected,” as he wrote in a letter to investors in advance of the company’s 2012 IPO. Now he was suggesting that the “more open and connected” world that Facebook facilitated had turned out to be a stranger and more perilous one.

In his January post, Zuckerberg was still disinclined to place specific blame on Facebook, but he could certainly see the wreckage both to the liberal political order and to his company’s brand. His travel project would, if nothing else, put a human face on a worryingly large and powerful force that had entered Americans’ lives. It would also provide Zuckerberg with data about how Facebook could best make use of its power.

On January 16, high-school students from the Talented and Gifted Magnet School in Dallas were building a community garden as part of a Martin Luther King Jr. Day celebration when Zuckerberg made his first stop. The man who’d changed the internet, and in turn the world, had come to Texas to help plant a garden. (Well, technically he was in Dallas to testify in a lawsuit against Facebook’s VR subsidiary, Oculus. But you don’t become a billionaire by letting a good court appearance go to waste.) “We’re all like, ‘But why Mark Zuckerberg? It’s MLK Day,’ ” one student later joked. But he stayed and pitched in for three hours. “He took his glove off and reached out to me and said, ‘Hey, I’m Mark,’ as if I didn’t know. He was extremely casual and humble, and I really respected that,” another student said.

He also went to the rodeo — his first — with Fort Worth mayor Betsy Price, who gave him a cowboy hat — also his first — and met with Dallas police. In a status update he posted the evening of his departure, sounding charmingly like a colonial sociologist, he attempted in vain to characterize the social relations he had observed: “In many ways, I still don’t have a clear sense of Texas. This state is complex, and everyone has a lot of layers — as Americans, as Texans, as members of a local community, and even just as individuals.” In the comments, users lined up to pitch Zuckerberg on visiting their home regions.

If Zuckerberg left with a lack of clarity, he must have also left inspired, because a month later he published a long essay called “Building Global Community” on his Facebook page. Clocking in at nearly 6,000 words, the post was the fullest expression of Zuckerberg’s understanding of the current political situation and the clearest articulation of what he imagined Facebook’s purpose should now be.

Like The Communist Manifesto, “Building Global Community” opens by offering a theory of history, in this case “the story of how we’ve learned to come together in ever greater numbers — from tribes to cities to nations.” This ever-expanding scale of human interaction continues to evolve, and “today,” Zuckerberg tells us, “we are close to taking our next step.” Zuckerberg isn’t gauche enough to say that Facebook is the “next step” that should follow the Westphalian order. Rather, he writes, “progress” demands that we form a “global community.” And, wow, isn’t it funny — there happens to be a “global community” right here, in Facebook.

Facebook had never before had a charter on the order of Zuckerberg’s declaration, and in its wake, the company “became more intentional” in its support of communities, VP of product partnerships Ime Archibong told me. Mere connection was no longer sufficient; the character of those connections now mattered as well. Coinciding with his manifesto, Facebook began courting the heads of its most “highly engaged” user groups in the same way it does advertisers and app developers, bringing them in for monthly meetings with top Facebook executives and diverting resources to empower them. In June, four months after publishing his manifesto (which he now calls a “blob,” perhaps sensing it had overcommitted him), Zuckerberg announced that Facebook’s mission would change: It would now officially be to “give people the power to build community and bring the world closer together.”

Kate Losse, an early Facebook employee and Zuckerberg’s former speechwriter, told me she thought it represented a serious transformation of the company’s purpose. “The early days were so neutral that it was almost weird,” she said. The first statement of purpose she could remember was a thing Zuckerberg would say at product meetings: “I just want to create information flow.” Now he was speaking of “collective values for what should and should not be allowed.” “It’s very interesting that the community language is finally being brought in,” Losse said. “ ‘Community’ is, like, a church — it’s a social structure with values.”

Two days after publishing his manifesto, Zuckerberg and his wife, Priscilla Chan, showed up unannounced at a cocktail bar called the Haberdasher in Mobile, Alabama. The bar’s owner offered to run interference with the Saturday-night, pre–Mardi Gras crowd, but Zuckerberg waved her off. “We’re in the people business,” he said. “This is perfectly fine.” They chatted with fellow patrons over drinks — a stout for Zuckerberg and a mocktail for Chan, who would announce her pregnancy two weeks later — and Zuckerberg gamely took a shot of Alabama whiskey with the bar owners (“Anything but tequila,” he pleaded when offered). Around midnight, they left — they had to be up early the next morning for church.

In nearly every state he’s visited, Zuckerberg has attended religious services or met with religious leaders. In Texas, he drank coffee with pastors; in Minnesota, he ate Iftar dinner with Somali refugees; in Charleston, he ate dinner with the entire cast of a walk-into-a-bar joke: “The reverend, rabbi, police chief, mayors, and heads of local nonprofits.” The next day, he visited Mother Emanuel AME, where white supremacist Dylann Roof killed eight parishioners and the church’s pastor in 2015.

Asked by a Facebook commenter last year if he was an atheist, Zuckerberg replied, “No. I was raised Jewish and then I went through a period where I questioned things, but now I believe religion is very important.” It was a telling way to put it. Publicly, at least, his interest in religion seems to be more sociological than existential. After attending services at Aimwell Baptist Church, in Mobile, he wrote on Facebook about “how the church provides an important social structure for the community.”

This has, generally, been the theme for the trip: How does this whole “community” thing work? And if you’re looking for an example of a powerful and enduring community that supersedes geographical territory, ethnic heritage, or class interest, religion offers a particularly fascinating case study. The Muslim Ummah united Arab tribes and non-Arabs in a universal community of believers. The Catholic Church was both a rival and a complement to state power, providing essential services and legitimizing the governance of kings and emperors, almost entirely through the force of shared values.

What shared values might Facebook enforce? Zuckerberg’s own personal values, such as his admirable commitment to immigration activism, tend to align with what’s good for Facebook. It would be difficult to think of a better real-life representative of the “globalists” bemoaned by Breitbart and other hypernationalist outlets than Zuckerberg, but his platform has also been those publications’ greatest asset in distributing their message. Zuckerberg’s commitment to liberalism — and to not alienating wide swaths of his user base — is deep enough that when Facebook was accused of “suppressing” conservative news, he met in person with conservative media figures to assure them Facebook was committed to giving them a voice.

Which may explain why in “Building Global Community,” Zuckerberg hesitates when he tries to lay out a foundational value system for the community he’s hoping to build. “The guiding principles,” he writes, “are that the Community Standards should reflect the cultural norms of our community, that each person should see as little objectionable content as possible, and each person should be able to share what they want while being told they cannot share something as little as possible.” That is: The guiding principles should be whatever encourages people to post more. Facebook’s actual value system seems less positive than recursive. Facebook is good because it creates community; community is good because it enables Facebook. The values of Facebook are Facebook.

In late September, Zuckerberg apologized for being initially “dismissive” about the problem of misinformation but insisted Facebook’s “broader impact” on politics was more important. He’s probably right, but I’m not sure he should want to be. What happens to politics when what he calls our “social infrastructure” is refashioned by Facebook? The last election gives us a hint. In February 2016, the media theorist Clay Shirky wrote about Facebook’s effect: “Reaching and persuading even a fraction of the electorate used to be so daunting that only two national orgs” — the two major national political parties — “could do it. Now dozens can.” It used to be that if you wanted to reach tens of millions of voters on the right, you needed to go through the GOP Establishment. But in 2016, the number of registered Republicans was a fraction of the number of daily American Facebook users, and the cost of reaching them directly was negligible. Trump was able to create a political coalition of disaffected Democrats and rabid right-wing Republicans because the parallel civic infrastructure of social media — and Facebook in particular — meant he had no obligation to Republican orthodoxy.

Or take the reorganized civic infrastructure of political advertising, suddenly brought to light by the announcement that Russian-government-linked accounts and pages had purchased $100,000 worth of ads, reportedly highlighting divisive issues and promoting spoiler candidates like Jill Stein. What effect that money might have had on last year’s election remains totally unclear. The amount spent, and the number of ads sold (“Roughly 3,000”), could indicate a potential audience anywhere from a few hundred thousand to scores of millions. The best-case scenario is that it was a largely frivolous experiment — a way for the infamous Kremlin-connected “troll farm,” the Internet Research Agency, to quietly test the effects of paid placement. The nightmare possibility is that the money was spent strategically in an effort to selectively target swing voters with specific interests in important electoral districts — white working-class Obama voters in Michigan who’d joined anti-immigrant Facebook groups, say — pushing divisive issues that encouraged or discouraged certain voting patterns.

Few people know how the money was used, or where, because Facebook has declined so far to identify the “inauthentic accounts” or Facebook pages or to say whom they targeted. Until Zuckerberg’s September announcement, the company had insisted that sharing further data with Congress, or the public, could violate U.S. privacy laws. (Facebook did, after reportedly receiving a search warrant, share the data with federal investigators looking into collusion between the Trump campaign and the Russian government.)

The policy changes announced by Zuckerberg in September represent an effort at self-regulation — Facebook’s way of saying “Trust us, we can handle ourselves.” But this isn’t a particularly appealing pitch. Facebook has been wrong, often: It spent most of the year insisting that it had sold no political ads to Russian actors. Twice in the past year, it’s admitted misreporting metrics to advertisers. Earlier in September, ProPublica discovered that it was possible to purchase ads targeted at self-described “Jew-haters.” Maybe more important, it’s not clear why we’d imagine that Facebook’s interests are the same as the U.S. government’s.

This was what felt so unnerving about Zuckerberg’s September announcement. As with all things Facebook, it opened itself up to multiple interpretations, depending on the angle from which you caught it: Rotate one way and it’s an admirable and much-needed statement of commitment and responsibility from a powerful but ultimately positive corporation. Rotate another and it’s an assurance to state leaders of Facebook’s continuing commitment to the sovereignty of nation-states, no matter how global its actual network is. (“Now, now, Mr. Prime Minister, we understand your little borders are very important to you.”)

Rotate further and it’s a declaration that Facebook is assuming a level of power at once of the state and beyond it, as a sovereign, self-regulating, suprastate entity within which states themselves operate. Planetary technical systems like Facebook, David Banks, a SUNY Albany professor who studies large technical systems, told me, “don’t want to be in an environment” — natural, legal, political, social — “they want to be the environment.” Facebook, this announcement seemed to imply, was an environment in which democracy takes place; a “natural” force not unlike democracy itself.

It’s not that there are no possible outside checks on Facebook’s power. The problem posed by Russian ads has an easy and direct regulatory fix. “It should be illegal for foreign governments to buy political ads,” said Tim Wu, the Columbia Law School professor and author of The Attention Merchants. “Facebook should be required to screen and disclose what their advertising practices are, how much people are paying, whether people get the same rates.” Congressional Democrats have recently been pushing to regulate online political ads under the FEC.

The size of companies like Facebook has renewed interest in antitrust, though Facebook itself has, so far, avoided the kind of attention and suspicion that Google has garnered, in part because the case is harder to make, particularly in the context of American antitrust law, which (at least in recent history) is designed to protect consumers rather than competition. Moreover, for antitrust action to have a meaningful effect, it would need to present an actual threat to Facebook — and with its market capitalization of $500 billion, even fines in the tens of billions are minor headaches. No proposals have been floated to break up the company, no doubt in part because we still have trouble sizing it up.

Wu compared Facebook to NBC and CBS and ABC in the 1950s, whose status as the sole TV networks meant they commanded nightly audiences in the tens of millions. But those networks operated under a strict regulatory environment from the get-go. Facebook has essentially gotten away with expanding into every corner of our lives without government interference by claiming to be a mere middleman for information passed between others. “Facebook has the same kind of attentional power, but there is not a sense of responsibility,” he said. “No constraints. No regulation. No oversight. Nothing. A bunch of algorithms, basically, designed to give people what they want to hear.”

Which is really the government’s larger problem. From one angle, the Facebook hypercube terrifies me; from another, it’s a tool with which I have a tremendous and affectionate intimate bond. I have 13 years of memories stored on Facebook; the first photo ever taken of me and my partner together is there, somewhere deep in an album posted by someone I haven’t talked to in years. It gives me what I want, both in the hamster-wheel–food-pellet sense, and in a deeper and more meaningful one.

And if we come to feel that a life structured by Facebook is miserable and regressive? “My answer there,” Banks said, “is storm the data centers.” He gives the example of the financier Charles Tyson Yerkes, who in 1897 attempted to secure his already extensive control of Chicago’s streetcar lines by bribing lawmakers to give him a 50-year franchise. A middle-class mob carrying nooses arrived at City Hall and, hollering “Hang him” at corrupt aldermen, ultimately ran Yerkes out of town. “Advocating for that sort of violent overthrow isn’t necessary,” Banks admitted. “But you do have to start learning how to make demands of a large system, which I think is a practice largely falling out of use.”

It tends to get forgotten, but Facebook briefly ran itself in part as a democracy: Between 2009 and 2012, users were given the opportunity to vote on changes to the site’s policy. But voter participation was minuscule, and Facebook felt the scheme “incentivized the quantity of comments over their quality.” In December 2012, that mechanism was abandoned “in favor of a system that leads to more meaningful feedback and engagement.” Facebook had grown too big, and its users too complacent, for democracy.

This article appears in the October 2, 2017, issue of *New York* Magazine.