Traditionally, congressional hearings that prominently feature the internet (or technology in general) have led to some of our national legislature’s most embarrassingly clueless moments. (Remember when Alaska senator Ted Stevens compared the internet to a “series of tubes”?) But this week, in three congressional hearings held over the course of two days, representatives from Facebook, Google, and Twitter faced a barrage of often very sharp questions from lawmakers over how their platforms were used and abused throughout the electoral process. It’s almost, almost, enough to make one optimistic about an appropriate and adequate legislative response: Congress is so close to getting it.

“It,” in this case, being both the scale and the nature of the threat posed by megaplatforms like Facebook and Google, not to mention those companies’ inability or unwillingness to self-regulate. While ads purchased by fake, Russian-linked accounts were the pretext, and often the subject, of the hearings, it was clear from the questioning that legislators understand two things: immense, centralized search and social media platforms like Google and Facebook warp the political process in ways we’re only beginning to understand, and neither company can be trusted to fix this on its own.

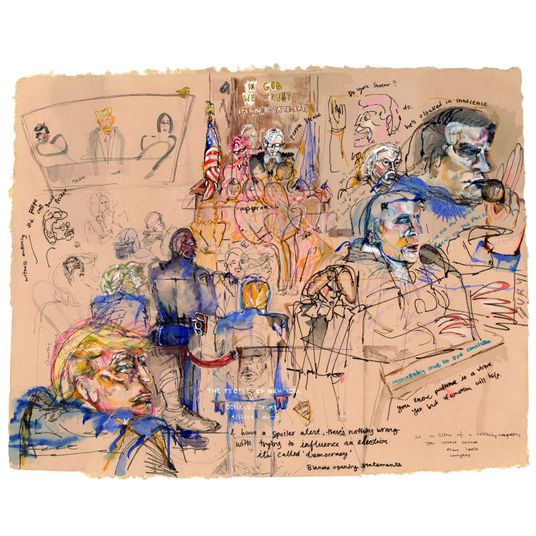

So what can be done? Over the course of this week, senators and representatives from both parties seemed to inch closer and closer to figuring that out. To start, they asked some pointed questions about the ads themselves. Senator Al Franken effectively called bullshit on Facebook’s carelessness: “How could Facebook, which prides itself on being able to process billions of data points and instantly transform them into personal connections for its users, somehow not make the connection that electoral ads paid for in rubles were coming from Russia?” The flip side of these companies’ much-hyped advanced machine learning and artificial intelligence is that when these systems fail, they fail hard. Yesterday, California senator Kamala Harris asked each of the three companies how much revenue they earned from ads that ran alongside posts made by Russian sock puppets. None of the three tech reps could say.

At points, though, the conversation broadened to the more concerning issue of how those sock puppets used Facebook’s free services to sow discord. The vast majority of posts attributed to Russia did not directly reference the election, but instead focused on the divisive issues the election centered around: immigration, gun control, LGBT rights. The problem with these posts, as Facebook general counsel Colin Stretch put it, was authenticity: it was not that paid Russian trolls were swarming online debate with “vile” (his word) far-right stances, it was that they were doing so under the guise of being Americans. North Carolina senator Richard Burr highlighted a case in which right- and left-wing people in Texas were manipulated into counterprotesting each other. Both sides were driven by Facebook groups attributed to Russia.

Vermont senator Patrick Leahy came sooo close to articulating the terrifying underlying issue when he put up a big board of political content taken from Facebook the day of the hearing. “These strongly resemble pages you’ve already linked to Russia. … Can you tell me that none of these pages were created by Russia-linked organizations? It’s very similar.” He’s not wrong! It’s not just that Facebook had trouble distinguishing between Russian and American accounts — it’s that users can’t either.

Can the companies moderate this content effectively themselves? Almost certainly not! In maybe the week’s most blistering exchange, Louisiana senator John Kennedy said point-blank that Facebook couldn’t possibly police its entire roster of advertisers. “I’m trying to get us down from la-la land here. The truth of the matter is you have 5 million advertisers that change every month, every minute, probably every second. You don’t have the ability to know who every one of those advertisers is, do you? Today, right now. Not your commitment, I’m asking about your ability.” If Facebook can’t verify the origin of 5 million advertisers, the idea of verifying the authenticity of 2 billion users is laughable.

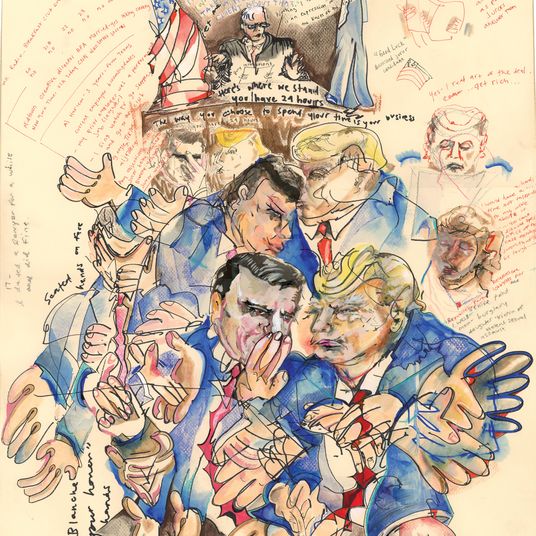

What are companies like Twitter and Facebook to do about their impostor problem? No option seems tenable. Twitter general counsel Sean Edgett tried to articulate why the company doesn’t require users to verify their identities, but stumbled (sidenote: these companies need to get much better at explaining why online anonymity is vital). Facebook’s terms of service require users to go by their actual names, but the company has no mechanisms in place to verify compliance. Adding such oversight would be costly and invasive. Congress could pass legislation, but lawmakers would then be wading into the thorny legal territory of regulating speech on private services, which is a third rail if I’ve ever seen one.

Illinois senator Dick Durbin asked on Tuesday, “How are you going to sort this out consistent with the basic values of this country when it comes to freedom of expression?” None of the companies had a good answer.

That’s because, as Congress is soooo close to realizing, there is no good answer. As Matt Stoller of the anti-monopoly think tank Open Markets observed while watching the proceedings, “My sense is that there are only two ways to deal with dominant platforms and free speech. Break ’em up, or accept private censorship.” None of these companies wants to take on censorship, because their businesses depend on users handing over content and data for free, and on the low overhead that minimal content moderation allows.

Yesterday, Virginia senator Mark Warner excoriated the companies’ reps: “We’ve raised these claims since the beginning of the year, and the leaders of your companies blew us off. Your earliest presentations show a lack of commitment and a lack of resources.” Sure, maybe the companies were just shortsighted, but that’s doubtful. Their business models require them to be as accepting of user-generated content as possible, even content they find detestable. (Fun detail: While Facebook’s general counsel was testifying before Congress about how much the company respects the American electorate, Mark Zuckerberg was courting Chinese president Xi Jinping and preparing to announce billions of dollars in revenue this quarter.) But in congressional hearings about the destabilization of American democracy, none of the companies could come right out and say that taking on the onus of censorship was a bad business proposition. The implication was still there, however, looming over everything: These companies are not going to solve this problem themselves.

Which leaves us with the other option Stoller mentioned: breaking up the companies, whether through direct antitrust prosecution or legislation that allows more competition in the marketplace. Members of Congress spent this week attacking web-industry Goliaths from every angle, going over the problem at length and picking apart the flaws of various solutions. They noted that the companies are enormous, but I don’t recall anyone saying that the companies are too big, and that is an important distinction. Congress is sooooooo close to figuring it out.