Intelligencer staffers Brian Feldman, Benjamin Hart, and Max Read discuss how dangerous manipulated videos really are.

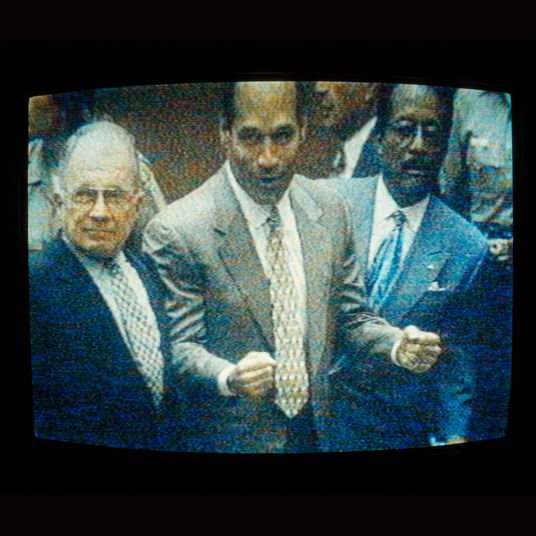

Ben: This week has seen another round of panic about “deepfakes” — the AI-assisted videos that employ manipulated audio and video to make it look as though a familiar face said or did something they didn’t say or do. First, someone created a (not very convincing) clip of “Mark Zuckerberg” boasting about his unfettered power, to see if Facebook would take it down, after the social media giant refused to remove an edited video of a “drunk” Nancy Pelosi a couple weeks ago. Another video, this one of Bill Hader subtly morphing into Arnold Schwarzenegger, also made the rounds on social media. The House held a hearing on the specter of deepfakes poisoning the political discourse, and there have been a lot of headlines implying that the information apocalypse is nigh. I have always been a little skeptical about the magnitude of this problem. Am I right to be, or am I kidding myself?

Max: I think it’s telling that you see way more headlines about the threat of “deepfakes” than you do actual deepfakes, and that the deepfakes you do see tend to appear in the context of articles fearmongering about them.

Ben: Exactly! With the caveat that the technology is still in its relative infancy, and these things will get more and more convincing.

Brian: I am skeptical of the threat of deepfakes because people get tricked by things that are a lot less sophisticated. Like, screenshots of fake article headlines are more dangerous than deepfakes.

Max: Deepfakes will surely get more convincing, but we’ve lived with extremely convincing photographic manipulation for decades now. And like Brian says, you don’t even need to do anything particularly sophisticated to fool people.

Brian: I would LOVE if deepfakes were the thing to worry about.

Ben: It seems like we’re in agreement on the basic threat. But to play devil’s advocate, wouldn’t some people be more convinced, or at least on a deeper level, by actual video of a politician, say, declaring war on a foreign country than they would be by a fake screenshot?

Max: I think we need to think about the context in which people encounter videos (or photos, or anything).

Brian: I think that’s putting a lot of faith in people to go straight to the primary source for information.

Max: Are they stumbling across a video in some social feed? Are they seeing it on their TV? Is it being shared really widely, by figures of authority?

Brian: The real threat of deepfakes, to me, is they convince one credible person who repeats the lie.

Max: By the time most people see an actual video, it will already have been discussed and dissected and weighed in on, and they’re likely to encounter all that discussion at the same time as they encounter the video. I think Feldman’s totally right, that the greater threat is that someone with power or influence sees a deepfake in a secretive or private context, rather than causing mass disinformation.

Brian: I’m now imagining a horrible reality in which politicians claim bad stuff that is actually caught on tape is a deepfake, not the other way around.

Max: Like let’s say we had a really stupid president, with some incredibly evil advisers who wanted to convince him to do something …

Brian: I’m visualizing …

Max: I actually think Feldman’s “horrible reality” is a way more likely scenario.

I wrote about this a little bit in a column last year — for me the threat that deepfakes represent isn’t the injection of fake bullshit into real news, it’s the erosion of trust in anything at all.

Ben: As the protagonist in the critically acclaimed series Chernobyl said, taking a page out of Hannah Arendt: “What is the cost of lies? It is not that we’ll mistake them for the truth. The real danger is that if we hear enough lies, then we no longer recognize the truth at all.”

Brian: Our version of Chernobyl is a clip of Trump doing a 720 flip on his wakeboard posted by “PepeDeplorable” with the caption “real?????”

Ben: That’s like the Reactor 4 explosion times a thousand.

Brian: Deepfakes are bad, but yeah, I don’t think they pose a significantly greater threat than anything else.

Ben: So why are people freaking out about them so much? Do we just always need some new technology to freak out about?

Brian: I think people desperately want to believe that mass manipulation requires some grand technical threat. It makes fighting against it feel more noble than, like, yelling “Have decency, sir!” at people on Twitter.

Max: Yeah, I think that’s right. “We” (for some value of “we”) really like to think that people “believe” stuff, or engage in politics in particular ways, based on rationally assessing the evidence available to them. This strikes me as a fantasy, basically.

But it’s a really important fantasy for a deliberative, liberal democratic system of government. I think panic about deepfakes masks a deeper panic about the possibility that there will not be some eventual consensus based on an empirical account of reality.

Ben: It seems like we’re more than halfway there already. I wonder if all this would be causing such a stir if the technology had arrived ten years ago. I think it’s just about the broader context right now, with people already feeling like the truth has ceded so much ground.

Max: I think that’s absolutely right. Though I’d argue that the issue as it stands isn’t that “truth” has ceded ground but that we’re at particularly low levels of civic and societal trust. And the threat of deepfakes, such as it is, is to further drive down those levels of trust.

Ben: Obviously, “the truth” is a slippery concept. But I was referring to the empirical account of reality you mentioned.

Brian: One thing about deepfakes and video fakery in general is that it has become a reflex for everyone across the board, when confronted with the fact that something is fake, to reframe it as “feeling true.” It’s the “satire/social experiment” excuse but … everywhere.

Ben: I haven’t seen that excuse much — but it feels true.

Max: I feel like we should mention that there’s another kind of threat posed by deepfakes, which is the thing that the tech was originally designed for, e.g. putting women’s faces on other women’s bodies. In this case the threat isn’t even that, like, you think that’s a “real” sex tape (or whatever) of the victim, but just that the video is used as a way to demean and belittle and harass the person. I’m way more worried about that than I am about the possibility that a deepfake might misinform someone.

Brian: A deepfake won’t get us into WWIII, but it will get someone swatted. That’s my prediction.

Ben: Not the worst-case scenario, but not a great-case scenario, either.