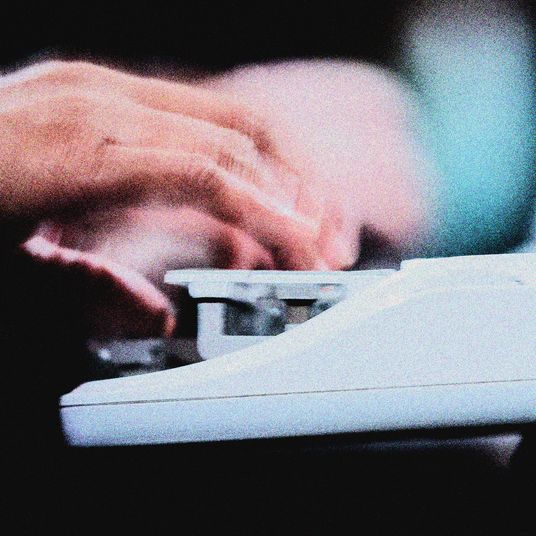

Society is, according to an old aphorism, always nine meals away from anarchy. For the tech industry, the threshold of chaos lies, apparently, at around five hours of Amazon downtime. On Tuesday, the US-East-1 data-center region of Amazon Web Services, the vast but largely hidden web-hosting arm of the internet retail giant, suffered an extended outage that took down a large number of websites and services. The cause? A typo entered by an Amazon tech.

At the heart of this breakdown was S3 (Simple Storage Service), one of the most popular of AWS’s many products (many of the websites you visit on a daily basis likely use S3 for things like images, HTML files, and other “static” media). S3’s ubiquity is due, in part, to the simplicity and necessity of the service its name describes. It provides easy-to-configure, flexible storage for all kinds of “objects” (i.e., files) in “buckets” (which is, delightfully, a technical term for a kind of unit of storage).
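For readers who want the bucket-and-object idea made concrete, here is a toy, in-memory sketch. It is not the S3 API: the `Bucket` class, its method names, and the bucket name are all hypothetical, chosen only to show that a bucket behaves roughly like a flat mapping from keys to blobs of bytes, with "folders" being nothing more than shared key prefixes.

```python
from typing import Dict, List


class Bucket:
    """Hypothetical toy model of a bucket: a flat map of key -> bytes."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._objects: Dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        # Storing under an existing key simply overwrites the old object.
        self._objects[key] = data

    def get(self, key: str) -> bytes:
        # Raises KeyError if no object is stored under this key.
        return self._objects[key]

    def list_keys(self, prefix: str = "") -> List[str]:
        # There are no real directories; a "folder" is just a key prefix.
        return sorted(k for k in self._objects if k.startswith(prefix))


# Illustrative usage with made-up names and contents.
site = Bucket("example-site-assets")
site.put("images/logo.png", b"\x89PNG...")
site.put("index.html", b"<html>...</html>")
image_keys = site.list_keys("images/")
```

The real service adds durability, permissions, and HTTP access on top, but the mental model of keys pointing at files is most of what a website needs from it.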

Put in Arrested Development terms, file storage is basically the banana stand of the internet (that is, there’s always money in it), and as a result, since the service’s launch in 2006, S3’s use has grown exponentially. The last time AWS offered a count of just how many objects lived in S3 buckets was in 2012, when the company surpassed the 1 trillion mark. In the past five years, that ratio of approximately three files on S3 for every star in the galaxy has probably only increased. And it’s likely that a significant number of those objects are clustered in the US-East region in Northern Virginia, one of AWS’s oldest data-center regions and, for various reasons, a crucial choke point in the network infrastructure landscape.

While the outage was bad enough to cause disruptions across a huge range of products and services, it wasn’t necessarily as bad as catastrophic headlines about Amazon “breaking the internet” might suggest. Some of the breakages were more annoyances than cataclysmic failures, related to things like images not loading or trouble uploading attachments (as reported on Slack, Signal, and several news websites including The Verge). Other effects were more pronounced, but still focused more on tech itself, such as issues with tools like GitHub, Bitbucket, and Travis CI hindering developer productivity. And the outage not only broke some websites but also broke other AWS products partially reliant on S3: AWS was even temporarily unable to update its own product-status dashboard because the green and red icons indicating availability were hosted in an S3 bucket.

But so far, there’s no sign that companies are rapidly preparing to abandon AWS in light of the outage — if anything, they’re likely to increase their redundancy across data-center regions instead. While the Great Bucket Incident of 2017 may have been more temporary inconvenience than life-or-death situation, like the various outages that have come before it, this one serves as a semiannual reminder of just how much AWS has shaped and continues to shape the internet — and how many parts of both day-to-day internet use and everyday life are increasingly engulfed in the ubiquity of the cloud. Cloud computing, of which AWS has long been at the leading edge, has facilitated some major advances in consumer technology by making increasingly complex computation a problem that happens somewhere else, rather than on the limited hardware of, say, a smartphone or an Amazon Echo. These advances are sort of self-perpetuating: because the complex computation behind, for example, machine learning can live in a data center rather than a device, it’s easier to incorporate machine learning or other complex computation into more and more products without having to staple on more powerful hardware. The more objects and devices depend on machine learning, the greater the need for data centers — and the greater the consequences when those data centers go down and suddenly your data-driven lighting system doesn’t seem all that smart.

While reports from the S3 outage of disrupted connected light bulbs and a slightly more obstinate Alexa don’t sound particularly insidious, it’s easy to imagine scenarios where the push for more and more cloud-connected services, combined with unexpected platform vulnerabilities, produces more dire situations: hospitals losing access to data in crucial moments, smart traffic systems going down, or footage from police-worn body cameras rendered unavailable all at once.

The answer to this dilemma, according to the companies fighting to be Top Stratocumulus, is mostly more redundancy for their own systems — not a more diverse array of systems (or, say, the possibility that not every object in the world needs an IP address). When Jeff Bezos previously compared AWS to the development of the electric power grid, he wasn’t really evoking the democratic potential of rural electrification, so much as establishing himself, and AWS, as a new locus of power shaping and facilitating large aspects of networked life.

(Ironically, in recent years AWS has had to start acting more like an actual power company — in 2015, AWS began investing in and developing wind and solar projects throughout the United States to support the growing energy needs of its data centers, and to meet a renewable-energy goal the company announced in November 2014, following years of criticism from Greenpeace over AWS’s use of coal-powered electricity. Private renewable-power grids are, in addition to being a good PR move, also an effective way of improving data-center efficiency and, well, making tech companies look even more like sovereigns. It sort of seems like a natural final evolution of the cloud — having achieved total self-sufficiency while rendering internet users completely dependent on their services, data centers make appealing fortification sites for a cyberpunk civil war between corporate overlords and a kleptocratic fascist regime. That is, maybe, a future in which we all lose unless we’re Neal Stephenson.)

To some extent, the insidious shift toward greater and greater infrastructural centralization (and, by extension, greater vulnerability) via cloud services is not a new narrative. It’s one that has been the central tension of the internet for decades — whether it’s the walled gardens of AOL and Facebook against the open web or cloud services against self-hosting or local storage, convenient and centralized private platforms have long sought to undermine the technical (and rhetorical) ideal of the internet as a resilient, equitably distributed system.

Which was also a tedious system. Whether we ever had that version of the internet, or whether it was simply a nice idea to strive toward, is debatable, but I don’t think that makes it any less of a worthwhile ideal to pursue. A few hours of downtime in Northern Virginia unfortunately isn’t quite enough to concretize the technical risks of infrastructural centralization at scale, but in an ideal world, those hours of downtime might offer users and developers some space to reflect on the political risks.