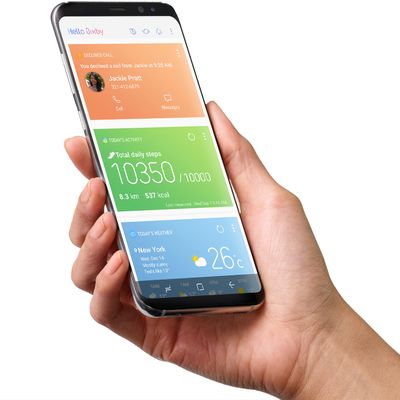

It’s conventional wisdom within tech that voice interaction (that is, talking to your phone) is the future of how we interact with our gadgets, particularly voice interaction through a “personal assistant” like Google Assistant, Siri, Alexa, or Cortana. Samsung desperately wanted to play catch-up, and introduced its own AI agent, Bixby, alongside this year’s flagship phone, the Samsung Galaxy S8. The only problem? Bixby can’t understand you. Or rather, Bixby can understand you only if you speak Korean. Its English-language capabilities, like an MTA project gone bad, just keep getting pushed further and further back.

The field of voice recognition and conversational AI took a huge leap forward about five years ago, as advances in machine learning (specifically, the use of recurrent neural networks) sharply improved speech-recognition accuracy. In 2013, Google’s voice-recognition accuracy hovered around 75 percent, per Kleiner Perkins’s Mary Meeker. Today, it is at 95 percent. Google got there because it had a tremendous amount of data to train its voice-recognition systems with. (Meeker also says about 20 percent of queries were made by voice, showing why Samsung may be anxious to get Bixby up and running.)

Both Google and Amazon allow their assistants to train against a user’s own voice, learning a particular person’s quirks and regional variations in speech. Even Apple, which has significantly lagged behind the competition, has improved its voice recognition (even if Siri itself can be frustratingly dense about what to do with those voice queries). But even these voice assistants require you to speak clearly, with significant pauses between words and clear enunciation. Blur your words together quickly, as you do in colloquial speech, and these systems, which collectively have thousands of very, very smart people working on them, can still be thrown for a loop.

Meanwhile, there’s Samsung. A spokesperson for the company, speaking to the Korea Herald, says, “Developing Bixby in other languages is taking more time than we expected mainly because of the lack of the accumulation of big data.” Google, Amazon, and Apple all have vast libraries of speech to fall back on, and Google in particular has its search engine to simulate the appearance of real depth (even if it can be badly led astray).

None of this is to bag on Samsung. The company is the second-largest manufacturer of cell phones in the world, and its Galaxy smartphones were briefly outselling the iPhone in 2016. It’s also an enormous company, and cell phones are but one of its many going concerns. (Nobody expects Google to turn out washing machines, or Apple to make a vacuum cleaner.) But the table stakes in the world of voice recognition and AI agents are so tremendously high that it’s hard to see how any company, even one as large as Samsung, will be able to break through.

Not that that’s deterring Samsung. It’s reportedly already planning to bring its own Echo competitor to market, code-named the Vega. It’s easy to see this as Samsung’s reach exceeding its grasp — why bring a product to market when you can’t even get your phones to understand English? — but there’s a good reason why Samsung may be forging ahead. Even if it can’t rack up the sales numbers the Echo has seen, it’ll at least get a few more people talking to Samsung — and helping it build up its own store of voice data to train against.