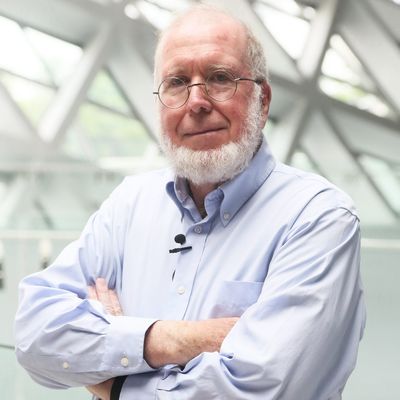

At Wired, Kevin Kelly’s actual job title is “senior maverick,” which is only mostly as preposterous as it sounds. An East Coast–born transplant to Silicon Valley who found himself editing Stewart Brand’s proto-cyberhippie periodicals Whole Earth Catalog and Whole Earth Review, Kelly was the founding executive editor of Wired, giving a certain countercultural patina to a lot of 1990s techno-futurism, and in the years since has become a kind of levelheaded but far-seeing technology guru. His last book, What Technology Wants, was a TED-circuit sensation that also generated some blowback from humanist critics who found it silly to say technology wanted anything at all. His new book, The Inevitable, extends that thesis and lays out 12 particular trajectories along which Kelly sees the near future of technology unfolding — from the end of finishedness (a trend he calls “Becoming”) to the wiring-together of the whole world and all its devices into an entity actually capable of global action (a trend he calls “Beginning”). It’s pretty optimistic!

Maybe the best place to start is with the title of the book — The Inevitable.

There’s no controversy there whatsoever!

It seemed to me to be a kind of response to the chatter about your last book, What Technology Wants.

I don’t think it was a response to the criticism — actually it’s just an extension of the same general thesis, which is that there are directions in technological evolution.

But direction is a bit different than inevitable, right?

I mean inevitable in the soft version of the word, meaning that the particulars are not at all predictable, but that the larger forms, the genres, are dictated by the physics and the chemistry of the actual technology. I’m talking about directions rather than destinies or spots.

Could you give an example of what you mean, maybe from the recent-ish past?

One obvious one is if I were to get in a time machine and go back, say, 40 years, to 1965. I was at a computer show with my dad, in Atlantic City, and there were these big hulking metal cabinets — acres of them. If a guy from the future comes and says, “Okay, so for the next 40 years, the main event is that every year these things are going to get half as small, twice as fast, half as expensive.” That’s a long-term trend — we call it Moore’s Law now. But if people were persuaded by that — one, you could make a lot of money not knowing anything about IBM or Apple. Two, if all of us had believed, we could’ve adjusted our education, our industries, our politics, to anticipate and to maximize the benefits that that knowledge would have yielded.

Do you think we haven’t done that — that we haven’t adjusted sufficiently to the pace of technological change?

In general, after 40 years, I think we have absorbed that one, but there are others that are happening.

Like what?

Take copying. I’m saying that the basic physics and dynamics of the technology, when you have transistors and wires — it’s inherently going to copy things. It’s going to copy things just to transmit things across the internet, which is the world’s largest copying machine. Anything that can possibly be copied — if it touches the internet, it’s going to be copied, indiscriminately. Like a superconductor liquid it’s going to go anywhere. You can’t really stop it because that’s the bias. And yet the music industry — for 20, 30 years — has been trying to stop it. There’s digital copy protection, digital rights management. They sue the customers. They desperately want to stop the copying, but you can’t. And if the music industry had accepted that the basic bias in this machinery is to copy things — if they had accepted that 20 or 30 years ago, they’d be much further along.

But there are some design considerations there, too. People often say that if there had been a micropayment system built into the internet from the start, then copying would be less of a threat to these industries, since there’d be a way to make money on the copies.

Right. There are certain trends that are inevitable — and forms, like the internet. But what kind of internet we have — or what legal regime around the copying — these are not inevitable at all. These are entirely unpredictable and, by the way, up to us. And also by the way, they make a huge difference to us. So while telephony was inevitable — as soon as you have wires and electricity, someone will invent the telephone and wrap the globe — the iPhone isn’t. And while the internet is inevitable — on any planet you go to, any civilizations you find, you’re going to find them to have the internet — Twitter’s not inevitable. So copying is inevitable, and is only going to increase. But what we do with that is not inevitable, at all. In fact we have a huge choice.

In the book you say that code can shape behavior as much as, if not more than —

Law.

Which made me wonder how you see the politics of technology and of Silicon Valley? In the book you say it’s fundamentally collectivist — you call it “digital socialism,” or “socialism without the state.” But at least some leftist readers would probably object to that by pointing to how prominent the profit motive is in Silicon Valley, and how many of the biggest companies are so large and powerful now they’re almost like states.

The first thing I’d say is that I’m mainly interested in politics with a small-p, rather than a capital P.

You mean, basically, you’re not interested in partisan politics.

Exactly. Even the dichotomy of left and right is basically a remnant of the industrial view.

What do you mean by “industrial view”?

The notion that you could roughly divide politics into left and right — there’s socialism on one side and I don’t know what’s on the other side. I mean, Trump, what is that? I don’t even know. But there has been this idea, which I believed for a while, that there is a zero-sum trade-off between social things and the individual — that you could only increase the power of the individual if you decreased the corresponding power of the group. What I’m arguing is that we actually have some technologies that allow us to do both at once — elevate the power of the individual and the power of the group. And where does that fit in?

You tell me!

You mentioned that the companies are in some ways de facto governments or states — and in fact that is another aspect of this. They have this tremendous challenge — how do you work that. What’s best practices? What do we want from these privately-owned platforms that do many things that government did before? We don’t have a consensus, let me put it that way.

We don’t even really have a way of thinking about it.

Is it left or right? I don’t know, I don’t care, I don’t think it really matters. That’s not the question. The question is, we have this whole bunch of new tools and powers. Connecting all the humans on Earth, and all the machines, at the same time, allows us to do things on a scale that exceeds nation-states. We have companies with 1.5 billion active customers all collaborating together — that is unheard of on the planet before. And it’s going to become more common. We’re just at the beginning of doing things collectively, collaboratively, at that planetary global scale. That’s the new power. Take Wikipedia, which is simply impossible in theory — there’s no way it can work. And here it is! So you have to figure out — what’s going on? Politically, where’s Wikipedia in relationship to left or right? Now, it’s all certainly going to be governed by politics — politics are always involved. But we’re kind of heading into a territory where those old names — socialism, other -isms — aren’t really very helpful.

So what terms should we be using? How should we be thinking about it?

First of all, as you know, I don’t address that very much in my book. I feel unqualified. But I will be very naïve and tell you some ideas.

Yeah, please.

I think my picture of the politics in the future is that we will increasingly have to transcend nationalism, which is really a terrible disease in many ways. And by 2025 almost everybody on the planet will have a device. We’ll connect all those devices. And that collective thing, which I call the holos — a combination of all this stuff — is something that will be a global phenomenon capable of doing global things, far larger in size than any country. It will be, I think, one of the tools that we try to use to deal with global problems, which range from climate change to immigration to flows of money. We’ll be trying to run the global economy rather than trying to run national economies. Now, my friends on the left think a world government is a bad idea, my friends on the right think it’s a bad idea — nobody I know thinks it’s a good idea. So we have a long way to go.

Does that mean that you see the next generation’s political conflict unfolding on the axis of global versus local, rather than left and right? Or is it that you see political conflict itself disappearing as we move further towards a global political structure?

I absolutely don’t see political conflict receding at all. I think we’ll have several decades of misguided political conflict — misguided in the sense that a lot of the jobs that people are fighting over are jobs that humans should not be doing. A hundred years from now we’ll be embarrassed that a human ever did these jobs. But for at least another decade people will be fighting over them. So this is a very slow process. And political conflict is inherent in the process.

Your question is what it will be about. I think it’s going to be in part about the nature of humans. A lot of things we’ll be wrestling with are things like, Is human nature sacred? A lot of people will be interested in the mutability of humans — AIs, genetic engineering, the convergence, changing who we are, this constant rate of change. There will be a lot of political conflict between those who really want to preserve — or resurrect, or keep in front of them — the sacred nature of the soul and the human that is not to be messed with. I think the abortion debate has a little bit to do with that, but I think we’re going to get way beyond that — I think abortion is just a kindergarten spat compared with where we’re going to go when people start to want to mess with their genes.

In the book you say we’ll be spending the next few decades in an identity crisis as a species.

And to your point, that’s an axis of huge political conflict — how we define ourselves.

Really you can still use the terms progressive and conservative to describe the two camps. It’s just that the thing under debate isn’t state ownership of industry but what “the human” means.

Right, true.

Can we talk a bit about some of the divergent ways that people see the future? There are obviously a lot of technologists who are very optimistic about where things are going, but in pop culture and elsewhere you also see a lot of fear — people who see dystopian possibilities in where things are headed. You hear a lot of talk about the robot apocalypse, for instance, and people are very anxious about the environment in general. In the book, you write a little bit about the appeal of dystopian visions — that, these days, very few people would want to live in the distant future. Why do you think so many people are so scared of the future? And what about these particular dystopian visions has such a grip on us and our culture?

It’s a really good question, and I wish I understood this better. I can only make a couple of observations. I am old enough to have enjoyed a period when the future was very golden, when we did want to live in the future. I don’t know when the transition point was, but it seems like somewhere around the year 2000, when we had the whole Y2K thing.

But something flipped, where the general default version of the future became this dystopia. Which, by the way, Hollywood is very good at, because it’s so cinematic.

You think those visions have had an effect.

I think it’s part of it — but only part. Science-fiction authors and Hollywood writers have not been capable of painting a friendlier future.

Why not?

In part because it is difficult. It is hard to put all these things together into a place where you want to live. What I’ve tried to do with my book is try to describe at least the near future as a place where people would want to live. I don’t know if I succeeded, but that’s what I was trying to do. Because I do think that when we get there we will prefer to be there than back here — that it will be a bit better. I’m not a utopian, I believe in incremental betterness, and I think it will be a little better. But what that looks like is hard because we’ve been bitten by so many things.

What do you mean “bitten”?

We now realize — it’s what I call technoliteracy — that any technology is going to bite us. Whatever its benefits, there’s also a cost. Things are not in any way without their corresponding downsides. I think that’s kind of a new view, a widespread view — yeah, you have this magic thing, but what else is it going to do to me? I mean — side effects! Just look at a magazine these days — the side effects of these drugs being advertised are like pages long. I mean, how can anyone take these things? And part of that is just disclosure — we’re now in full-disclosure mode.

Do you think there were always those downsides, but that we’ve just become more aware of it?

Yes, absolutely. You wouldn’t give polio shots now. They didn’t ask you back then, they just gave it to you. Now you have full disclosure, and you have counseling, and all this other stuff. Which I think is good! I’m just saying it wasn’t present before. And it makes assembling a vision of the future a huge challenge because we all know the downside as well, and you have to incorporate that.

It’s not an issue of things changing so much, just that the changes will be complicated.

The future will be barely better than it is now — which is not very cinematic. Most of what the world will look like 25 years from now — it’ll mostly be the same. You’ll look out the window and there’ll still be streets. New York City will look very much like it does right now, except that the cars will be driven by bots.

Do you think people will be happier?

I think you have to define what you mean by happiness. I think what we’ve learned is that happiness is not just a single dimension. There’s contentment, there’s cheerfulness, there’s ease, there’s satisfaction. On a few of those dimensions — as I said, yes, we would prefer to live there. But I also think we’ll be more anxious.

Why do you think that is?

We started off by saying people are scared, and I think we’re not really evolved to change this fast. This is a skill we have to learn. The fact that we live longer, we’re more educated and all this stuff — I think there’s a price for that. And the price may be that we get anxious easier. For example, even though the world is safer, we have this impression that it’s more dangerous. We have these maps of kids roaming — we used to roam ten miles and never tell our parents where we were. And now this fear of where kids can roam is strong, even though the world is inherently safer. Because we’re more anxious.

And you attribute that anxiety to a kind of techno-shock that started with the Industrial Revolution.

Yeah, I think that Alvin Toffler was right about that. When my grandfather was born, he believed that his life would be just like his grandfather’s — and it was. Now, people are born and the world they’re going to die in will be very, very different in many ways, certainly psychologically.

How does it affect you?

The fact that we’re perpetual newbies, that we’re always learning things and having to unlearn stuff, and we’ve gotten something done, but we’re not going to use it, we’re going to go on to the next thing — I think that’s ruthless. We can learn how to do it; we have to learn how to do it. And I think we can use technology to help us do that — whether it’s meditation technology, whether it’s drugs, whether it’s other devices. I think we can solve the problems technology produces with more and better technology.

Interesting. What other problems are you thinking of?

I’m not worried about too much, but I’m worried about the fact that we don’t have a global consensus on the rules for cyberwar. In other words, we will have these weapons — it seems like there’s no technology we haven’t weaponized, and so therefore we will weaponize AI, have them make decisions about shooting people. We don’t have really good rules for understanding that. We don’t have really good rules for what’s legitimately civilized to do. Taking down someone’s power grid or bank — is that okay or is that like land mines? What are the lines? And because there’s no lines, because there’s no agreement, some very bad things are going to happen.

How do you see privacy issues unfolding in that context? There was a time when it seemed the future belonged to Facebook, and everybody’s life was going to be public. And then there was a period of pullback when people seemed more protective of their data. Now we’re probably somewhere in between. Where do you think we’re headed?

Privacy is another one of those words that’s an umbrella word that means a lot of different things, and we have to unravel and unbundle it over time. But the thing we normally think of as privacy is very much bound to another axis of transparency, with privacy on one end. But it’s also tied up on this other axis of the personalization and the generic.

What do you mean?

Everybody wants to be treated as an individual, with all the preferences and special preferences, but the only way to do that is to be transparent — to disclose yourself to that. If you want to be completely hidden and obscured, then you have to accept generic treatment. The surprise is that in general people have pushed the lever, the slider, over to “I want personalization, and I’m going to be transparent to do it.” In the book I say that vanity has trumped privacy. Now, I think we should have that choice, but it seems again and again that when we give people choice, they push it to, “Well, I’m going to disclose because I want to be treated as a person.” Now, will we change and move that back when we realize what the consequences of that are? It’s possible, so I think we really should have that slider. But it is surprising to me that, when given the choice, vanity trumps privacy.

How does that calculation change now that we’re no longer dealing with privacy issues exclusively in contrast to state and surveillance power, but in terms of what corporations know about us and what they do with that knowledge?

I don’t think I have a vision, but you’re right that the language that we have been using is left over from the old dichotomy of state versus individual, and should be broadened.

How?

I have a suspicion of the very idea that data can be owned. I don’t find ownership a very good model for data, let me put it that way. I think part of what we have to evolve is some different metaphors, different language, different frameworks, for understanding what’s going on with data.

Like what?

Take my genes. The idea that I own my own genes I find logically absurd, because 99.99 percent of my genes are shared with other people.

So we need to reimagine what’s actually happening when we have data that is coming from the Fitbit that I’m wearing — it’s recording what I’m doing, but it’s passing it to all these other agencies, or channels, or nodes, and they’re each doing something along the way with it, and they have some claim to it.

Now, my view is that every party to data — the institutions that created the device that measures the data, the institutions that transmit the data — they all have rights and they all have duties.

Does that mean you see at least a limited duty to share some information about yourself?

I do! Your face — my face. I have total legal rights to that face, but I also have a duty, in some sense, to show my face in public.

But in terms of data, what’s the logic behind the duty to share? Is it to make the collective system more intelligent and responsive?

Exactly, yeah. Take something as simple as medical advances. We can really only make a drug that’s going to help you if we know enough about your biology from the evidence of many others. If we had a human population of one, we’re not going to get very far, because we’ll probably kill you before we discover what works. We need a lot of people before we can advance science.

So we require others, for our own welfare — our own welfare is tied to the group welfare. And what we’ve made with communication technology is a whole new set of tools to make the group smarter, to learn more from the group, to have the group multiply, from two to two million or two billion. That’s elevation. And I’m perfectly willing myself, by the way. I haven’t had my full genome sequenced, but when I do, I will put that in the public domain, because I think there’s this tremendous benefit waiting for us when we share our medical stuff, and our genes and whatnot. It’s very, very, very powerful.

There are a lot of people who find it scary.

There’s a lot of fear — kind of a weird fear. Some of it’s legitimate, but not most of it. There are some insurance issues, but I think they are a little bit overblown and can be remedied easily.

It also seems like the issue there might be the system of insurance we have rather than the sharing of information per se.

Right, and I think that can be fixed.

But there are other areas where the collective benefit may be less clear than with health. For instance, what comes to my mind right now is all this stuff brought up by Peter Thiel, Gawker, and Hulk Hogan. I don’t know how much you want to talk about that in detail, but personally it seems to be intuitive that there should be some things that people should have the right to keep private if they so choose. Do you not feel that way?

I think the answer is yes, and I think what those are will be something that shifts over time, by culture, and will be a work in progress. The kind of extreme would be your own private thoughts — something that you didn’t even say, but just thought. I’m taking a science-fiction view here. Should daydreams be public? Should fleeting thoughts be public? I think most people would say no. And then the question is, well, should anything you say be public — anything you said to anybody be public? And there will be lines — lines here and here. I don’t think it’s going to be binary — it could be relative to your position in society. Should anything that Trump says, anywhere — should that be public? Or Obama, the president — should anything the president says, anytime anywhere, be public?

I actually envision an economy where every single transaction would be public, to some extent. A lot of the people who run Bitcoin and Blockchain are sort of these crypto-anarchists, or at least libertarian, who want to make money currency that is outside the jurisdiction of any kind of state government. But in a curious kind of way, I could imagine governments mandating blockchain or crypto-currency, because, even though there’s anonymity, every single transaction in that system is on the public record. I’m not going to say that’s inevitable, but we certainly, over time, we’ve evolved less tolerance for opaque transactions, and so the logical extension would be that we would head toward making more and more of those transactions public.

That brings us back to something we were talking about a little earlier — the way that particular design choices and coding choices can have massive effects on how people actually end up living. I was interested to see, strung through your book, a number of what I thought of as alternative paths for the internet — there was the false promise of virtual reality, which we’re only now getting to, and there was the period when Second Life seemed like it was going to be a really big deal. I wonder if you feel, looking back on the history of the internet, if there are any paths that you wish we had taken, and how inevitable you think the internet we have now was from the beginning.

Well, I was terribly dismayed by the heavyweight way the music and recording industry closed down file-sharing — Napster and all those. Because I think that in this alternative universe, they could’ve come up with ways to monetize them rather than just obliterate them. And that’s a shame, because I think it would have been a much more interesting, and a much more dynamic and productive way — and we still don’t fully have that.

On the other hand, even without such out-in-the-open file-sharing, we are still obviously living in a kind of consumer paradise when it comes to culture, with so much being created. But there’s still a lot of anxiety about the ability to earn a living off of one’s creative, or semi-creative, work.

There’s definitely a challenge in the sense that the old business model, which was based around the precious copy — you needed to buy a copy of magazines, or a copy of a book — and now the copies are free. So you need to sell things that can’t be copied, and the economy shifts to the things that aren’t easily copied. I’m certainly evidence of that in the sense that, while I have a book coming out, I’m not making my living from the book, I’m making my living from the talks in foreign countries, like a lot of others. That can’t be copied. You can probably get a copy of the book somewhere online by now, somewhere on BitTorrent, I don’t know. But look at food. You can get food cheaply, but if you want to have a meal, and the whole spectacle and the aura of a chef, you’ll pay more for that.

Jaron Lanier has written endlessly about the wrong paths we’ve taken with the business model. We have — Nick Carr’s term — the digital sharecroppers, who are making all this content for Facebook. There are a lot of people who think that micropayments should be a part of this economy. That lament goes back to the days of Ted Nelson. I mean, Ted — Ted feels we made a wrong turn with the 404 one-way hyperlink, and that it was all downhill.

Those ideas are connected, right? He thinks micropayments would have been much easier with two-way links.

Right, right. And we may come back to something like that, where we have forwarding of credit.

In a few places in the book, you talk about how early it still is. We have this tendency to think of the internet as basically mature. But you compare it to the Cambrian explosion in evolution, which is right at the crack of dawn.

But it does get hard to change the fundamentals. Five hundred years from now we’ll still have TCP/IP. We’ll still have some of the most rudimentary elementary elements of the internet; they will still be intact. It’ll be like the electron-transport-chain cycle in the cell, which hasn’t changed in a billion years. There are kind of amendments to it, but it’s there.

And the internet is the same way. I’d almost make a bet that TCP/IP would last longer than the U.S. Constitution in its current form. It just gets harder to change some fundamental things the farther you go along.