One of the big changes in election coverage that emerged in 2016 was the widespread use of predictions expressed as probabilities. Once the private vice of data geeks, probabilities were used that year by just about everyone in the business of handicapping presidential elections. And it became increasingly obvious that elements of the news media, and the public that relies on media for interpretation of political developments, often didn’t get it.

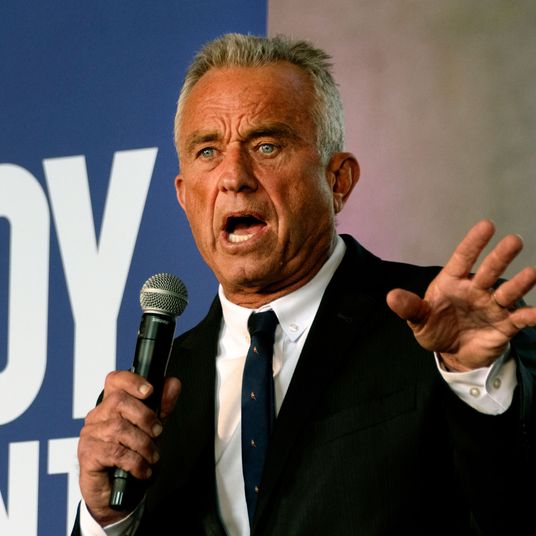

FiveThirtyEight’s Nate Silver, an early adopter of “probabilistic” predictions, explained the problem in his vast postelection analysis of why so many people were surprised by Donald Trump’s election:

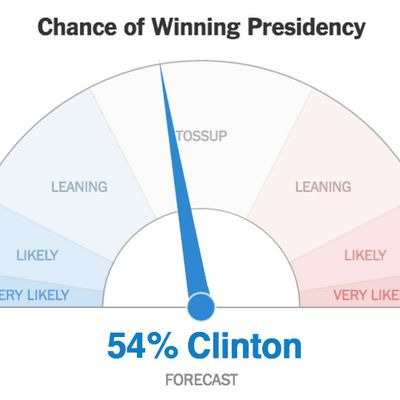

The New York Times’ media columnist bashed the newspaper’s Upshot model (which had estimated Clinton’s chances at 85 percent) and others like it for projecting “a relatively easy victory for Hillary Clinton with all the certainty of a calculus solution.” That’s pretty much exactly the wrong way to describe such a forecast, since a probabilistic forecast is an expression of uncertainty. If a model gives a candidate a 15 percent chance, you’d expect that candidate to win about one election in every six or seven tries. You wouldn’t expect the fundamental theorem of calculus to be wrong … ever.

Speaking of the Upshot model, on Election Day itself its proprietors tried to keep probabilities in perspective:

A victory by Mr. Trump remains possible: Mrs. Clinton’s chance of losing is about the same as the probability that an N.F.L. kicker misses a 37-yard field goal.

Football fans know such whiffs happen often enough that one should never assume they won’t happen at any particular moment. And it should be noted that the Times’ 85 percent Clinton win probability wasn’t universally shared: FiveThirtyEight, for example, got grief from quite a few progressives for projecting the probability of a Clinton win at around 70 percent down the stretch. But Nate Silver consistently argued that Clinton’s polling lead left a Trump win in the electoral college well within (to borrow a term from meteorology, which also deals a lot with probabilities) “the cone of uncertainty.”
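For readers who want to see the arithmetic behind those percentages, here is a minimal Python sketch. It is not anything the forecasters published, and the function name `one_in_n` is made up for illustration. It assumes the forecasts are taken at face value: a 15 percent Trump chance under the Upshot's 85 percent Clinton estimate, and roughly 30 percent under FiveThirtyEight's roughly 70 percent.

```python
def one_in_n(probability: float) -> float:
    """Return N such that an event with this probability is
    expected to happen about once in every N tries."""
    if not 0 < probability <= 1:
        raise ValueError("probability must be in (0, 1]")
    return 1 / probability


# Illustrative inputs: the underdog's chance implied by each forecast.
upshot_trump = 0.15           # 1 - 0.85 (Upshot's Clinton estimate)
fivethirtyeight_trump = 0.30  # roughly 1 - 0.70 (FiveThirtyEight, approximate)

print(f"Upshot: about one win in {one_in_n(upshot_trump):.1f} tries")
print(f"FiveThirtyEight: about one win in {one_in_n(fivethirtyeight_trump):.1f} tries")
```

Run as written, this gives about one win in 6.7 tries for the 15 percent figure (the "six or seven" cited above) and about one in 3.3 for the 30 percent figure. Neither is anything like a sure thing for the favorite.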

Clearly, though, there was a lot of confusion about probability-based predictions, and now we have a study that not only confirms this confusion but suggests it might have an impact on voter turnout by boosting confidence in projected “winners” to unrealistic levels. As reported by Pew’s Solomon Messing, three academics conducted experiments with probability-based and vote-share-based projections of political contests, and discovered rather unambiguously that the former led to a degree of confidence in the outcome that far outstripped the data:

Those exposed to probability forecasts were more confident in their assessments of which candidate was ahead and who would ultimately win, compared with those shown only a projection of the candidate’s vote share. In every condition across the board, participants provided inaccurate accounts of the likelihood of winning.

The findings suggest that media coverage featuring probabilistic forecasting gives rise to much stronger expectations that the leading candidate will win, compared with more familiar coverage of candidates’ vote share.

And there was, Messing noted, a lot more coverage of probabilistic forecasting than in the recent past:

The number of articles returned by Google News that mention probabilistic forecasting rose from 907 in 2008, to 3,860 in 2012, to 15,500 in 2016.

But does it matter, beyond the reputations of the number crunchers who seem to have gotten their predictions “wrong” (a questionable judgement to make about a probabilistic forecast, when you think about it)? Maybe so:

How people interpret statistics might seem inconsequential – unless these interpretations affect behavior. And there is evidence that they do: Other research shows that when people aren’t sure who will win an election, they vote at higher rates, though not all studies find the same effect. That could mean that if citizens feel assured that the election will almost certainly break one way after reading probabilistic forecasts, some may see little point in voting.

There are plenty of other explanations available for disappointing Democratic turnout in 2016, but the incredible tidal wave of shock in Democratic circles at Trump’s victory suggests a lot of misperception of the actual odds — based not on the polls (which were actually pretty accurate in predicting the national popular vote), but perhaps, to some extent, on a misunderstanding of how probabilities work. We’ll probably never know whether that misunderstanding really affected turnout patterns. But it would be nice before the next presidential election if news media would develop better ways of explaining data and the limited extent to which they offer certainty in a competitive contest. And if only to avoid triggering horrific memories among Democrats, maybe the New York Times should revise that predict-o-meter. It was and remains a perfectly valid instrument, but for many Americans it looks like the Hand of Doom.