Yesterday afternoon at its annual I/O developer conference, Google delivered a mostly standard slew of announcements, plotting out its course for the future. It touched on most of the topics you’d expect from a marquee Google keynote: impressive demos of AI advancements, new hardware iterations, upcoming software tweaks and company initiatives. There’s a new version of Android coming this year, and there’s a new Google Home device to let you watch TV in the kitchen. Google can now transcribe and translate words in real time. Did you know most American children get their first phone by age 8? Does that scare you?

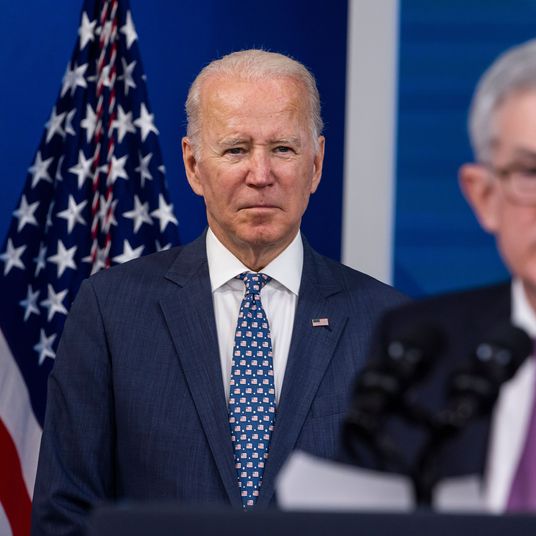

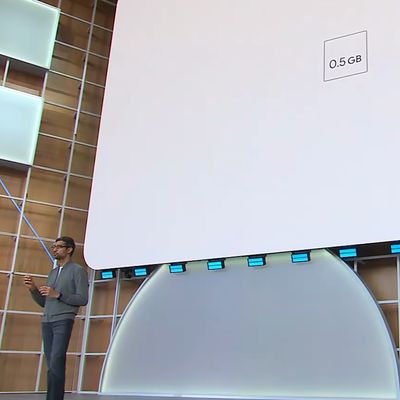

Perhaps the most surprising announcement, though, was one that breezed by quickly, not a flashy sci-fi-come-to-life demo but a smaller-step advancement. But the implications are substantial. About a half hour into the presentation, CEO Sundar Pichai came back onstage to talk about how complex the models that power Google’s Assistant are. The Assistant, if you need a refresher, is like Google’s version of Siri — a program that processes natural-language commands and responds accordingly.

The Assistant needs to be able to break down a voice snippet into syllables, stitch those syllables into words, and then figure out what those words actually mean in context. Doing this, Pichai explained, requires a lot of data and numerous complex computational models. That’s why voice assistants send commands from your device to a remote server, which can process them much faster. In the past, Pichai said, the Google Assistant software was a program roughly 100 gigabytes in size. Now, Pichai announced, thanks to some ingenuity, the Assistant’s footprint has been greatly diminished: it’s roughly half a gigabyte in size.

And that means that the Assistant no longer needs to send your voice to a remote server. Now it can process commands entirely on its own. “Think of it as putting the power of an entire Google data center in your pocket,” Pichai told the audience. It would make the Assistant, he said, “so fast that tapping to use your phone would seem slow.”

The onstage demo seemed to bear that out, as the demonstrator quickly ran through a series of voice commands on her phone, from simple requests like “take a selfie” to more advanced requests like “get a Lyft ride to my hotel.” The latter request elicited an audible response from the audience, presumably because Google’s software was able to correctly process what “my hotel” meant specifically. (Granted, these onstage demos are heavily rehearsed and sometimes involve sleight of hand, so take them with a grain of salt.) As she spoke, transcribed commands appeared in the lower-right corner of the phone’s screen, confirming what the device was interpreting. Unlike Siri as it exists now, popping up only when summoned and taking over a user’s entire screen, the Assistant seems designed to be a constant presence as someone uses an Android phone.

The announcement that the Assistant can process commands entirely on-device feels significant for a few reasons. For one, Google has never been a company for which “on-device” was an important quality of its software. Its success has been predicated on users sacrificing control for the convenience of the cloud. Chromebooks almost become paperweights if they can’t connect to Wi-Fi. Consumers are, I think, comfortable with the idea that using a Google service means uploading data to a server and letting it be analyzed remotely. That’s how it’s always been. For Google to relinquish that advantage for the sake of user convenience and lower latency appears to be a serious strategic shift and an admission that there are still some things that the cloud can’t do.

The change also feels like a considerable shot at Google’s smartphone rival, Apple. For years, Apple has hawked on-device processing as part of one of its major selling points — privacy. Apple doesn’t, for instance, upload biometric information used to unlock your device to the cloud. The implication of Apple constantly talking up this point is that there is some data that is too precious to handle remotely and you shouldn’t trust companies (i.e., Google) to do it. Despite this attitude, as of now, Siri requires an internet connection to process commands (though that may change, according to a recent Apple patent filing).

As Google prepares to integrate the Assistant more thoroughly throughout the user experience, however, it needs to establish those privacy bona fides. In order to get people to actually use the Assistant as Google intends, it needs to make users feel like they are speaking only to their phones, and that their voices won’t be carried thousands of miles to a data center. The faster speed of the next-gen Google Assistant is one thing, but it’s the security that arguably matters more. These two aspects, speed and security, combined, make the idea of using the Assistant for most phone tasks seem like far less of a novelty than it has been in the past.

Lastly, Google’s announcement gives a faint glimmer of hope to the idea that hardware still matters. In recent years, large tech companies like Google, Apple, Microsoft and Amazon have shifted to a “service” business model. It’s one that requires users to constantly stay connected to the mothership in order to complete tasks, and the importance of any particular device’s hardware specs has diminished as a result. Even so, Google’s announcement could be seen as a sly admission that for certain reasons, mainly speed and privacy, having a device not reliant on a persistent online connection can be an advantage.